This article explains how to build a neural network and how to train and evaluate it with TensorFlow 2. It assumes you know the basics of machine learning and deep learning and want to build a model in TensorFlow. We are going to use the tf.keras API, which lets you design, fit, evaluate and use deep learning models to make predictions in just a few lines of code.

Setup Environment

Read the following tutorial to set up an ML/DL environment with TensorFlow 2:

Setup Deep Learning environment: Tensorflow, Jupyter Notebook and VS Code

After installing and setting up the environment, create a new Jupyter notebook by running the "Python: Create Blank New Jupyter Notebook" command from the VS Code Command Palette (CTRL + SHIFT + P), and import TensorFlow into your program:

```python
import tensorflow as tf
```

Dataset

We are going to use the MNIST dataset, which has 60,000 training and 10,000 test images of size 28x28, each labeled with one of 10 classes. The images are handwritten digits that must be classified as a number from 0 to 9.

The first time you run the following code, it downloads the dataset of handwritten digits:

```python
mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()
```

On Windows 10, the downloaded dataset is stored in the following folder:

C:\Users\[UserName]\.keras\datasets

x_train and x_test contain the training and test images (pixel values), respectively.

y_train and y_test contain the corresponding class labels (the digits 0-9).

The pixel values (0-255) are scaled to the range 0-1. Keeping the inputs small and on a consistent scale generally makes training more stable:

```python
x_train, x_test = x_train / 255.0, x_test / 255.0
```
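To see what the scaling does without downloading MNIST, here is a minimal NumPy sketch on a small synthetic array. `fake_images` is a hypothetical stand-in, assumed to have the same `uint8` dtype and 0-255 range as the MNIST pixels returned by `load_data()`.

```python
import numpy as np

# Hypothetical stand-in for MNIST images: 3 images of 28x28 uint8 pixels (0-255)
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

# Same operation as above: dividing by the float 255.0 gives float64 values in [0, 1]
scaled = fake_images / 255.0

print(fake_images.dtype, scaled.dtype)
print(scaled.min() >= 0.0, scaled.max() <= 1.0)
```

Besides rescaling, the division also converts the integer pixels to floating point, which is the type the network's weights work with.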

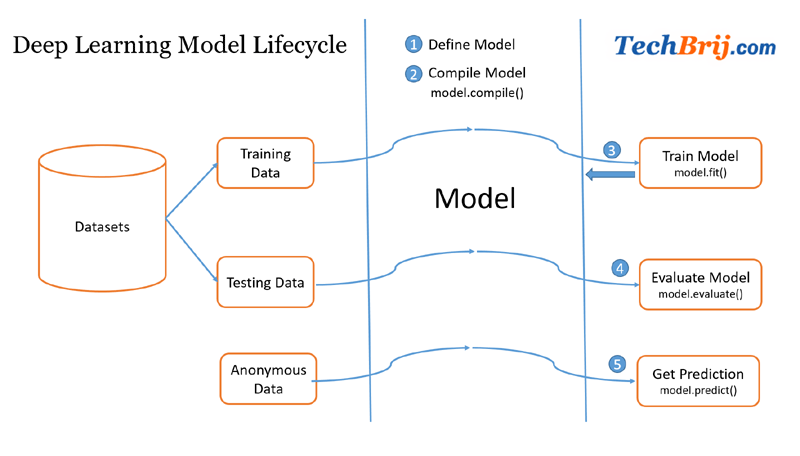

Model Lifecycle

The model life-cycle consists of five steps:

1. Define the model: create a Sequential (or Model) instance and add the layers

2. Compile the model: call the compile() method and specify the loss, optimizer and metrics

3. Train the model: call the fit() method on the training data

4. Evaluate the model: call the evaluate() method on the test data to measure the trained model

5. Get predictions: call the predict() method on new data

Define the Model

Let's build a tf.keras.Sequential model by stacking layers:

```python
model = tf.keras.models.Sequential([
    # ... layers go here ...
])
```

Here are some frequently used tf.keras layers:

Flatten: reshapes an N-dimensional input into a 1-dimensional array (keeping the batch dimension). It is commonly used in CNNs after the feature-extraction layers, before the Dense layers.
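As a rough illustration of what Flatten does to a batch of MNIST-shaped images, here is a NumPy sketch. The reshape mimics the layer's output shape and ordering; it is not the Keras implementation.

```python
import numpy as np

# Hypothetical batch of 2 "images" of 28x28, filled with 0, 1, 2, ... for easy inspection
batch = np.arange(2 * 28 * 28).reshape(2, 28, 28)

# Flatten keeps the batch dimension and collapses the remaining axes, row by row
flat = batch.reshape(batch.shape[0], -1)

print(flat.shape)  # (2, 784)
# The first 28 flattened values are the first pixel row of the first image
print(np.array_equal(flat[0, :28], batch[0, 0]))
```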

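Dense: adds a layer of neurons, each fully connected to every neuron in the previous layer. It implements the following operation, where X is the input, W is the layer's weight matrix (kernel) and dot is a matrix product:

output = activation(dot(X, W) + bias)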
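The formula can be written out directly in NumPy. This is a minimal sketch of one dense layer's forward pass with ReLU. The shapes (784 inputs, 128 units) mirror the model used later, and the random weights are placeholders for the values that training would learn.

```python
import numpy as np

def dense_forward(x, w, b, activation):
    """Compute activation(dot(x, w) + b) for a batch x of shape (batch, in_features)."""
    return activation(x @ w + b)

def relu(z):
    return np.maximum(0.0, z)

rng = np.random.default_rng(42)
x = rng.random((4, 784))                  # 4 flattened images (placeholder data)
w = rng.normal(0.0, 0.05, (784, 128))     # kernel: one column of weights per neuron
b = np.zeros(128)                         # one bias per neuron

out = dense_forward(x, w, b, relu)
print(out.shape)  # (4, 128)
```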

Activation: each layer's output is passed through an activation function, which applies a (usually non-linear) transformation to it. ReLU and Softmax are popular options.

- ReLU: means "if X > 0 return X, else return 0", i.e. max(0, X). Positive values pass through to the next layer unchanged, and negative values are replaced with 0.

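- Softmax: converts a set of values into probabilities that sum to 1, so the largest value gets the highest probability. For example, if the output of the last layer is [0.1, 6.3, 0.05, 0.1, 0.5], softmax turns it into roughly [0.002, 0.991, 0.002, 0.002, 0.003]. Picking the most likely class then gives the one-hot result [0, 1, 0, 0, 0].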
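Both activations are short enough to write by hand. The NumPy sketch below applies them to the example vector from the Softmax bullet. Subtracting the maximum before exponentiating is a common trick for numerical stability and does not change the result.

```python
import numpy as np

def relu(z):
    # Replace negative values with 0; keep positive values unchanged
    return np.maximum(0.0, z)

def softmax(z):
    # Shift by the max for numerical stability, then normalize the exponentials to sum to 1
    e = np.exp(z - np.max(z))
    return e / e.sum()

logits = np.array([0.1, 6.3, 0.05, 0.1, 0.5])

print(relu(np.array([-2.0, 0.0, 3.5])))
probs = softmax(logits)
print(np.round(probs, 3))
print(int(np.argmax(probs)))  # 1
```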

Dropout: helps prevent overfitting (high training accuracy but low test accuracy). During training, it randomly sets a fraction of its input units to 0, according to a predefined rate. At inference time, it leaves its inputs unchanged.
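Below is a minimal NumPy sketch of the idea. It assumes the "inverted dropout" behavior described in the tf.keras.layers.Dropout documentation. During training, inputs are zeroed with probability `rate` and the surviving inputs are scaled by 1 / (1 - rate), so the expected sum is unchanged. At inference, inputs pass through untouched. The `dropout` function is a hypothetical re-implementation for illustration only.

```python
import numpy as np

def dropout(x, rate, training, rng):
    """Hypothetical re-implementation of inverted dropout for illustration."""
    if not training or rate == 0.0:
        return x  # inference: no units are dropped
    keep = rng.random(x.shape) >= rate        # each unit is kept with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
x = np.ones(10_000)

train_out = dropout(x, rate=0.2, training=True, rng=rng)
infer_out = dropout(x, rate=0.2, training=False, rng=rng)

print((train_out == 0).mean())       # close to 0.2
print(np.unique(train_out))          # only 0 and 1.25 appear
print(np.array_equal(infer_out, x))  # True
```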

Conv2D: performs 2D convolution, learning a set of kernels (filters). It is mainly used on image datasets.
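To make "learning a set of kernels" concrete, here is a NumPy sketch of what a single Conv2D filter computes on one single-channel image. It uses stride 1 and no padding, which correspond to the Keras defaults `strides=(1, 1)` and `padding='valid'`. Like Keras, it slides the kernel without flipping it (cross-correlation). The function `conv2d_single` and the hand-made edge kernel are illustrative assumptions; in a real Conv2D layer, the kernel values are learned.

```python
import numpy as np

def conv2d_single(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation of one channel with one kernel."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel and the image patch under it, then sum
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 5x5 image with a vertical edge: left columns 0, right columns 1
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-made vertical-edge kernel (a trained layer would learn its own values)
kernel = np.array([[-1, 1]] * 2, dtype=float)

feature_map = conv2d_single(image, kernel)
print(feature_map)
```

Each row of the 4x4 feature map is [0, 2, 0, 0]. The filter responds only where the image changes from 0 to 1.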

Consider the following model:

```python
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(10, activation='softmax')
])
```

In the above model, the first layer, Flatten, converts each 2D 28x28 image array into a 1D array of 784 values.

The second layer, Dense, has 128 neurons. Each neuron (or node) takes input from all 784 nodes in the previous layer, weights that input with parameters learned during training, and outputs a single value to the next layer. The Dropout(0.2) layer that follows randomly sets 20% of these 128 outputs to 0 during training.

The last Dense layer has 10 neurons, one for each of the 10 classes in our data. Its softmax activation outputs a probability for each class, and these probabilities are the model's predictions.
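A quick count of the trainable parameters connects the layer descriptions to the model's size. Each Dense layer has one weight per input-output pair plus one bias per neuron. Flatten and Dropout have no parameters. In tf.keras, `model.summary()` reports the same per-layer counts.

```python
def dense_params(inputs, units):
    # weights (inputs x units) + biases (units)
    return inputs * units + units

flatten_out = 28 * 28                    # Flatten: 784 values, no parameters
hidden = dense_params(flatten_out, 128)  # Dense(128)
# Dropout(0.2) has no parameters and does not change the shape
output = dense_params(128, 10)           # Dense(10)

total = hidden + output
print(hidden, output, total)  # 100480 1290 101770
```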

Compile the model

The model's compile() method takes the loss, optimizer and metrics parameters.

The three most common loss functions are:

mean_squared_error: for regression

binary_crossentropy: for binary classification

sparse_categorical_crossentropy: for multi-class classification with integer labels (as in MNIST)
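The three losses can be sketched in NumPy to show what each one measures. These are simplified batch means. They assume probabilities (not logits) as predictions, which matches a softmax or sigmoid output layer, and integer labels for the multi-class case. Keras' own implementations include extra details such as clipping with a small epsilon, so treat these as illustrations rather than the library code.

```python
import numpy as np

EPS = 1e-7  # avoid log(0)

def mean_squared_error(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

def binary_crossentropy(y_true, p):
    # y_true in {0, 1}; p = predicted probability of class 1
    p = np.clip(p, EPS, 1 - EPS)
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))

def sparse_categorical_crossentropy(labels, probs):
    # labels: integer class ids, shape (batch,); probs: shape (batch, num_classes)
    probs = np.clip(probs, EPS, 1.0)
    picked = probs[np.arange(len(labels)), labels]  # probability assigned to the true class
    return -np.mean(np.log(picked))

mse = mean_squared_error(np.array([1.0, 2.0]), np.array([1.5, 2.0]))
bce = binary_crossentropy(np.array([1, 0]), np.array([0.9, 0.1]))
scce = sparse_categorical_crossentropy(np.array([2, 0]),
                                       np.array([[0.1, 0.2, 0.7], [0.8, 0.1, 0.1]]))
print(mse, bce, scce)
```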

Stochastic gradient descent (SGD) and Adam are the most widely used optimizers, and accuracy is a common metric.
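Both optimizers build on the same basic rule: nudge each weight against its gradient. Here is a minimal sketch of that gradient-descent update on a toy one-parameter loss, f(w) = (w - 3)^2, chosen purely for illustration. Real SGD computes the gradient on mini-batches of data. Adam adds momentum and per-parameter adaptive step sizes on top of this rule.

```python
def loss(w):
    return (w - 3.0) ** 2          # toy loss with its minimum at w = 3

def grad(w):
    return 2.0 * (w - 3.0)         # derivative of the toy loss

w = 0.0
learning_rate = 0.1
for step in range(100):
    w -= learning_rate * grad(w)   # update rule: w <- w - lr * dL/dw

print(round(w, 6), loss(w))
```

Each step shrinks the distance to the minimum by a factor of 0.8 here. A learning rate that is too large (above 1.0 for this loss) would make the updates diverge instead.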

```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

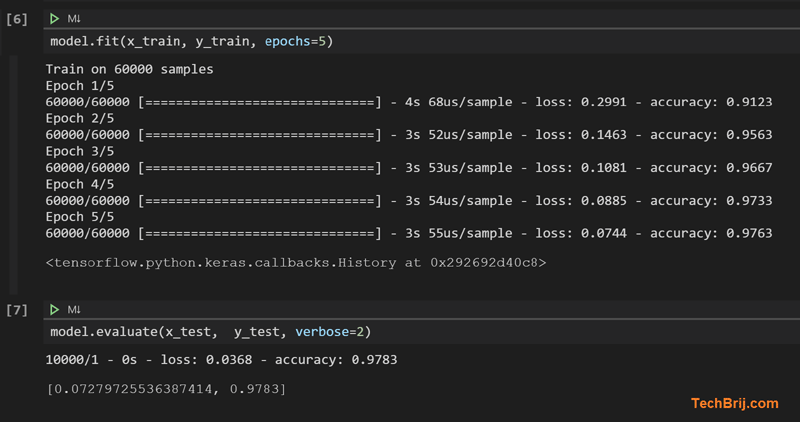

Train the model

```python
model.fit(x_train, y_train, epochs=5)
```
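Because fit() is called here without `batch_size`, tf.keras uses its default of 32 samples per gradient update, so each epoch goes through the 60,000 training images in mini-batches. A quick calculation shows how many update steps that is; this is the count shown in the per-epoch progress bar.

```python
import math

num_train = 60_000   # MNIST training images
batch_size = 32      # tf.keras default when batch_size is not passed to fit()
epochs = 5

steps_per_epoch = math.ceil(num_train / batch_size)
print(steps_per_epoch, steps_per_epoch * epochs)  # 1875 9375
```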

Evaluate the model

```python
model.evaluate(x_test, y_test, verbose=2)
```

The image classifier is now trained to ~98% accuracy on this dataset.
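With integer labels and softmax outputs, as here, the "accuracy" metric is the fraction of samples whose most likely predicted class matches the label. Below is a minimal NumPy sketch of that computation. The arrays are made-up placeholders, not MNIST results.

```python
import numpy as np

# Placeholder softmax outputs for 4 samples over 3 classes, and their true labels
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4],
                  [0.6, 0.3, 0.1]])
labels = np.array([0, 1, 2, 1])

predicted = np.argmax(probs, axis=1)   # most likely class per sample
accuracy = np.mean(predicted == labels)
print(predicted, accuracy)  # [0 1 2 0] 0.75
```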

Prediction

For simplicity, let's get a prediction for the first test image:

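```python
import numpy as np

# predict() expects a batch, so wrap the single image: shape (1, 28, 28)
img = np.array([x_test[0]])
predictions = model.predict(img)

# The predicted class is the index of the highest probability
predicted_class = np.argmax(predictions[0])
original_class = y_test[0]

print('Original class: {} \nPredicted class: {}'.format(original_class, predicted_class))
```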

The result is as follows:

```text
Original class: 7
Predicted class: 7
```
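Because predict() expects a batch, the single test image is wrapped in a list to get shape (1, 28, 28). The NumPy sketch below shows an equivalent way to add that batch dimension with `np.expand_dims`. It also shows how `np.argmax(..., axis=1)` turns a whole batch of predicted probabilities into class labels. `x_test_like` and `fake_probs` are hypothetical stand-ins for `x_test` and the output of `model.predict`.

```python
import numpy as np

x_test_like = np.zeros((10, 28, 28))          # hypothetical stand-in for x_test

# Two equivalent ways to make a batch containing just the first image
a = np.array([x_test_like[0]])
b = np.expand_dims(x_test_like[0], axis=0)
print(a.shape, b.shape)  # (1, 28, 28) (1, 28, 28)

# Hypothetical predict() output for a batch of 3 images over 10 classes
fake_probs = np.full((3, 10), 0.01)
fake_probs[0, 7] = fake_probs[1, 2] = fake_probs[2, 1] = 0.91
print(np.argmax(fake_probs, axis=1))  # [7 2 1]
```

With the trained model, `model.predict(x_test[:3])` returns an array of shape (3, 10) in the same layout. You can pass it to `np.argmax(..., axis=1)` in the same way.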

Conclusion

So, you have built your first machine learning model and used it to make a prediction!

This introductory post showed how TensorFlow 2 can be used to build a machine learning model. It covered the main components of tf.keras, the deep learning model lifecycle (define, compile, train, evaluate and predict) and the overall workflow.

Enjoy TensorFlow!